Machine Learning Projects

ML projects covering Naive Bayes, K-Means, and Ensemble Learning

These lab projects were completed for the Introduction to Machine Learning class at Tsinghua University, taught by Prof. Min Zhang. The repository consists of the following lab projects:


Lab 1: Naive Bayes Classifier

Implemented a Naive Bayes classifier with Laplace smoothing and custom feature design for spam email classification on a real-world dataset. Evaluated the model using accuracy, precision, recall, and F1 score; the best configuration reached over 99% accuracy.

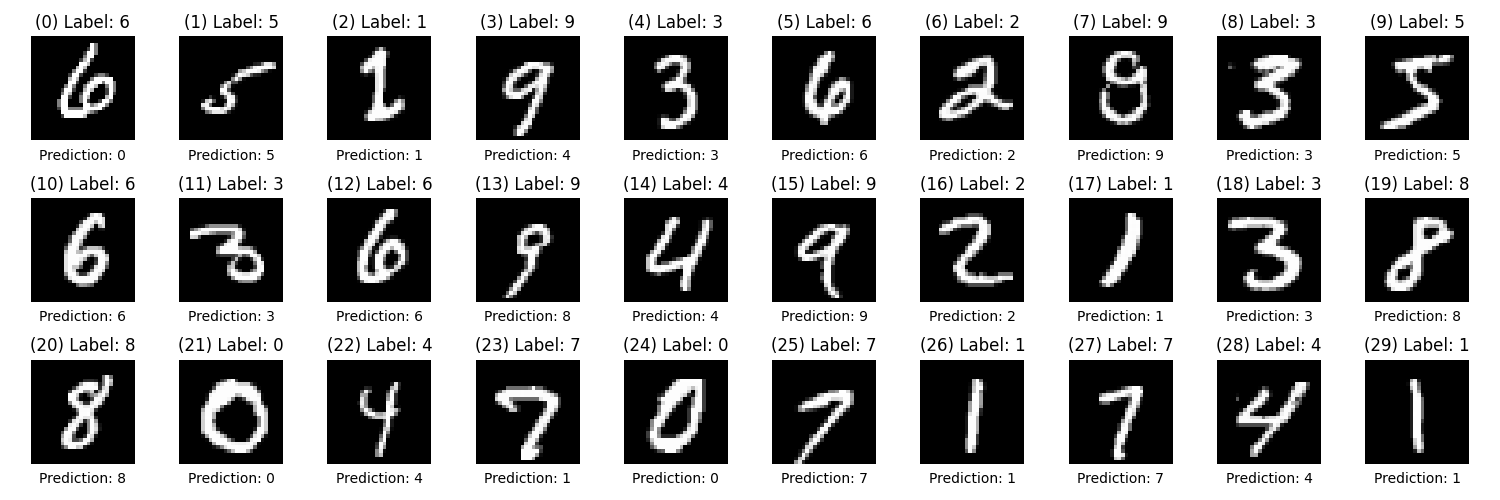
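As a rough illustration of the Lab 1 technique, the sketch below implements a multinomial Naive Bayes classifier with add-alpha smoothing (alpha = 1 gives Laplace smoothing), where each word likelihood is estimated as (count(w, c) + alpha) / (total words in c + alpha * |V|), together with the four evaluation metrics. It is not the lab's actual code: the token-list input, the function names (`train_nb`, `predict_nb`, `binary_metrics`), the `"spam"`/`"ham"` labels, and the toy data are all hypothetical.

```python
import math
from collections import Counter, defaultdict

# Hypothetical helpers for illustration; not taken from the lab's code.

def train_nb(docs, labels, alpha=1.0):
    """Fit multinomial Naive Bayes with add-alpha smoothing (alpha=1 is Laplace).

    docs: list of token lists (tokenization is assumed to happen elsewhere).
    labels: list of class labels, e.g. "spam" / "ham".
    """
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)  # class -> token -> count
    vocab = set()
    for tokens, y in zip(docs, labels):
        word_counts[y].update(tokens)
        vocab.update(tokens)
    n = len(labels)
    log_prior = {c: math.log(k / n) for c, k in class_counts.items()}
    log_lik = {}
    for c in class_counts:
        denom = sum(word_counts[c].values()) + alpha * len(vocab)
        # Smoothing keeps unseen (word, class) pairs from getting zero probability.
        log_lik[c] = {w: math.log((word_counts[c][w] + alpha) / denom) for w in vocab}
    return log_prior, log_lik

def predict_nb(model, tokens):
    """Return the class with the highest log posterior (up to a constant)."""
    log_prior, log_lik = model
    scores = {}
    for c in log_prior:
        # Words never seen in training are skipped (one common convention).
        scores[c] = log_prior[c] + sum(log_lik[c][w] for w in tokens if w in log_lik[c])
    return max(scores, key=scores.get)

def binary_metrics(y_true, y_pred, positive="spam"):
    """Accuracy, precision, recall, and F1 with `positive` as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    acc = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return acc, precision, recall, f1

# Toy usage with made-up data.
docs = [["win", "money", "now"], ["cheap", "money", "offer"],
        ["meeting", "tomorrow", "agenda"], ["lunch", "tomorrow"]]
labels = ["spam", "spam", "ham", "ham"]
model = train_nb(docs, labels)
print(predict_nb(model, ["money", "offer"]))      # spam
print(predict_nb(model, ["tomorrow", "agenda"]))  # ham
```

Working in log space avoids numerical underflow when an email contains many tokens.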

Lab 2: K-Means Clustering Algorithm

Implemented the K-Means clustering algorithm on the MNIST dataset, analyzing clustering accuracy and visualizing the results with t-SNE. It achieved 79% accuracy with 30 clusters, and the analysis highlights the challenges of cluster initialization and of separating high-dimensional data.
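A minimal sketch of the Lab 2 pipeline, assuming plain Lloyd's-algorithm K-Means with random initialization from the data points, and assuming "clustering accuracy" means mapping each cluster to the most frequent true label among its members (a common convention when there are more clusters than classes, e.g. 30 clusters for 10 MNIST digits; the lab may have used a different mapping). The functions `kmeans` and `cluster_accuracy` are hypothetical names, the input is toy 2-D points rather than MNIST images, and t-SNE is omitted.

```python
import random
from collections import Counter

# Hypothetical helpers for illustration; not taken from the lab's code.

def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, iters=100, seed=0):
    """Lloyd's algorithm. Returns (centroids, assignments)."""
    rng = random.Random(seed)
    # Initialization (an assumption): k distinct data points chosen at random.
    centroids = [list(p) for p in rng.sample(points, k)]
    assign = None
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        new_assign = [min(range(k), key=lambda j: sq_dist(p, centroids[j])) for p in points]
        if new_assign == assign:
            break  # converged: assignments stopped changing
        assign = new_assign
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, a in zip(points, assign) if a == j]
            if members:  # an empty cluster keeps its previous centroid
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return centroids, assign

def cluster_accuracy(assign, labels):
    """Label each cluster by majority vote, then score like a classifier."""
    majority = {}
    for c in set(assign):
        members = [l for a, l in zip(assign, labels) if a == c]
        majority[c] = Counter(members).most_common(1)[0][0]
    correct = sum(majority[a] == l for a, l in zip(assign, labels))
    return correct / len(labels)

# Toy usage: two well-separated blobs with made-up labels.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
labels = [0, 0, 0, 1, 1, 1]
centroids, assign = kmeans(points, k=2)
print(cluster_accuracy(assign, labels))  # 1.0
```

Because the result depends on the initial centroids, running with several seeds and keeping the lowest-inertia solution (or using a smarter initialization such as k-means++) is a common way to address the initialization sensitivity mentioned above.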


Lab 3: Ensemble Learning with Bagging and AdaBoost

Developed ensemble models that apply Bagging and AdaBoost to SVM and Decision Tree base classifiers to predict product review scores. Compared the models' performance using MAE, MSE, and RMSE. The results were mixed: Bagging with SVM performed slightly better, while AdaBoost showed limited effectiveness on this dataset.
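The sketch below illustrates the Bagging idea and the three error metrics from Lab 3. It is an assumption-laden stand-in rather than the lab's code: a depth-1 regression tree (decision stump) replaces the SVM and Decision Tree base learners, review scores are treated as numbers so the ensemble averages its members' predictions, AdaBoost is not shown, and all names (`fit_stump`, `bagging`, `regression_metrics`) and data are hypothetical.

```python
import math
import random

# Hypothetical helpers for illustration; not taken from the lab's code.

def fit_stump(X, y):
    """Depth-1 regression tree: choose the (feature, threshold) split with least squared error."""
    best = None
    for f in range(len(X[0])):
        for t in sorted(set(row[f] for row in X)):
            left = [yi for row, yi in zip(X, y) if row[f] <= t]
            right = [yi for row, yi in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            lm, rm = sum(left) / len(left), sum(right) / len(right)
            sse = sum((v - lm) ** 2 for v in left) + sum((v - rm) ** 2 for v in right)
            if best is None or sse < best[0]:
                best = (sse, f, t, lm, rm)
    if best is None:  # no valid split (all feature values identical): predict the mean
        m = sum(y) / len(y)
        return lambda row: m
    _, f, t, lm, rm = best
    return lambda row: lm if row[f] <= t else rm

def bagging(X, y, n_estimators=25, seed=0):
    """Train each base learner on a bootstrap sample; average their predictions."""
    rng = random.Random(seed)
    n = len(X)
    models = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]  # sample n rows with replacement
        models.append(fit_stump([X[i] for i in idx], [y[i] for i in idx]))
    return lambda row: sum(m(row) for m in models) / len(models)

def regression_metrics(y_true, y_pred):
    """Return (MAE, MSE, RMSE)."""
    errs = [p - t for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errs) / len(errs)
    mse = sum(e * e for e in errs) / len(errs)
    return mae, mse, math.sqrt(mse)

# Toy usage with made-up data: one feature, scores on a 1-5 scale.
X = [[1], [2], [3], [10], [11], [12]]
y = [1, 1, 1, 5, 5, 5]
model = bagging(X, y)
preds = [model(row) for row in X]
print(regression_metrics(y, preds))
```

Averaging suits numeric scores; when the base learners are classifiers, majority voting over their predicted labels is the usual alternative. RMSE penalizes large errors more heavily than MAE, which is why the two metrics can rank models differently.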

Check out the code implementation and report on GitHub.